Imperial physics grad Nishant Prasad explains what an artificial neural network is and what it does

In recent years, artificial intelligence (AI) and lawtech have been some of the hottest commercial awareness topics among students seeking to enter the legal profession.

Last month, at Legal Cheek’s first student event of the autumn, over 250 students assembled at Clifford Chance’s office in Canary Wharf to hear insights from lawyers and legal project managers working on the frontline of these challenges. During her thought-provoking presentation, associate Midori Takenaka showed two images on-screen, which she described as “what comes up when you Google what does artificial intelligence look like?”

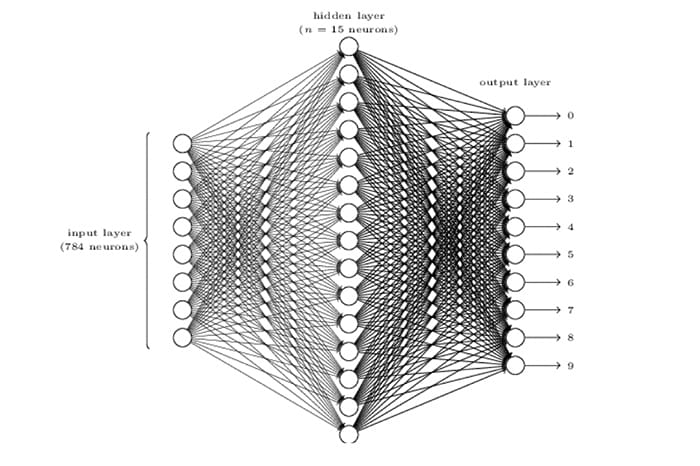

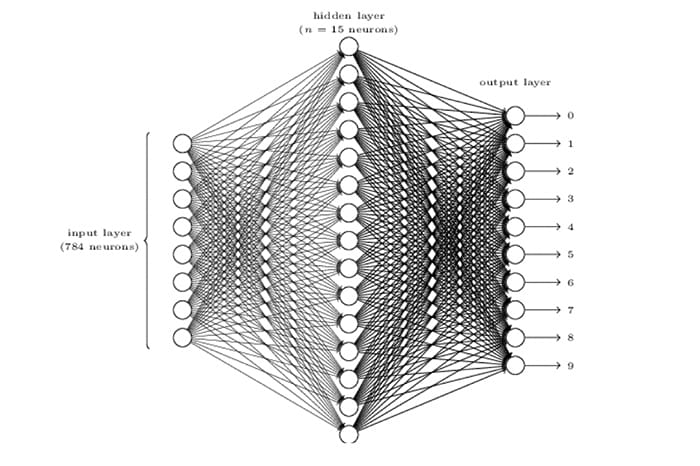

One of these images, shown below, was a diagram of an artificial neural network:

Before we discuss what an artificial neural network is and more importantly what it does, let’s start by exploring a set of problems that neural networks can help solve.

Text recognition

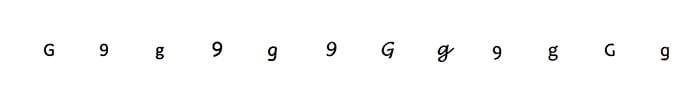

Consider the following list:

As you read across, your mind is quickly identifying shapes and accurately characterising them as either the number 9 or the letter ‘g’. While this may seem a relatively trivial task for a human, creating a machine that can do this visual recognition and cognition is extremely difficult, all the more so at human speed! While computers are exceptionally good at maths, they are still poor at abstraction, interpretation and cognition.

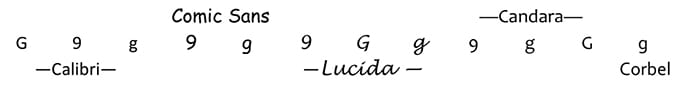

We display the above list again, only this time having labelled the fonts:

You might first think we could have computers overcome this problem by trying to code algorithms to characterise text based upon its shape. Unfortunately, we can’t just say that the number 9 is a circle in the top half attached to a tail that goes down from the right-hand side and call it a day. After all, such a description would mischaracterise the letter ‘g’ in Comic Sans as the number 9.

You might then think that we could adopt a ‘lookup table approach’ where we show a computer what the number 9 looks like in all the different fonts that exist and then ask it to see if a future digit looks like it is one of those. Unfortunately, this approach has three major problems:

1. The first is that it is effectively impossible to create a table with an exhaustive list of fonts. This approach breaks down when it meets a character it has never seen before. This is partly why optical character recognition (OCR) software has found accurately identifying handwriting to be so difficult.

2. Second, the similarities between different characters and fonts lead to inaccuracies. For example, the letter ‘g’ in Comic Sans looks more like the number 9 in Calibri and Lucida Handwriting than it does the letter ‘g’ in Calibri, Candara or any uppercase ‘G’.

3. Finally, it’s also very inefficient.
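The first problem can be illustrated with a minimal Python sketch. The tiny 3 by 3 bitmaps and the names `NINE_FONT_A`, `NINE_FONT_B` and `classify_by_lookup` are made up for illustration; real character images are far larger.

```python
# Hypothetical 3x3 "images": 1 = ink, 0 = blank.
NINE_FONT_A = (
    (1, 1, 1),
    (1, 1, 1),
    (0, 0, 1),
)
# The same digit drawn slightly differently, as a new font might draw it.
NINE_FONT_B = (
    (0, 1, 1),
    (1, 1, 1),
    (0, 0, 1),
)

# The lookup table only knows the exact bitmaps it has been shown.
lookup_table = {NINE_FONT_A: "9"}

def classify_by_lookup(image):
    """Return the stored label for an exact match, or None if the image is unseen."""
    return lookup_table.get(image)

known = classify_by_lookup(NINE_FONT_A)   # found in the table
unseen = classify_by_lookup(NINE_FONT_B)  # one pixel differs, so no match
```

A single differing pixel is enough for the lookup to fail, which is why the table would need an entry for every possible rendering of every character.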

When you’re reading through the text, say the digit ‘7’ in this sentence, the mind isn’t looking at each character and comparing it to every 7 that you’ve seen before. Instead, the brain has been trained to recognise the common patterns that occur in all the 7s you have seen before and to compare an image against these patterns. In addition, the mind is also using the other information around each character, such as the word ‘digit’, to provide contextual clues. Therefore, if we are trying to get computers to accomplish text recognition, a task that humans can perform effortlessly, it is a good idea to try to simulate how the brain works, which we cover next.

At its core, the central nervous system is built around a network of electrically excitable cells called neurons. These cells receive input(s), and if the ‘strength’ of these inputs is sufficient, the neurons ‘fire’ an electrical signal. This output propagates through the network, and collectively this neural network is responsible for human cognition.

Artificial neurons

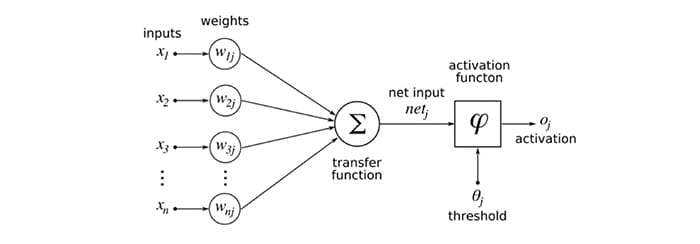

As the name suggests, an artificial neural network is a computer program designed to model this process. Like its biological counterpart, fundamental blocks called neurons assemble together to form an artificial neural network. While the system as a whole may be very complex, an individual artificial neuron, shown below, is relatively easy to understand.

At its most basic level, all an artificial neuron does is count and check. It takes in a bunch of numbers, adds them together and checks to see if the sum is bigger than another number. If the sum is larger, the neuron transmits a signal; if the sum is smaller, the neuron doesn’t do anything.

More precisely, as we move from left to right, in the first column we have a list of inputs that go into the neuron. These inputs are multiplied by different weights, shown in the second column, depending on where each input came from.

An analogous way of thinking about this weighting is the ‘bass boost’ function on a music player. This function makes sound at the lower end of the frequency spectrum (bass) louder than sound at the higher end (treble). Another way of looking at it is currency conversion. You can’t add €100 and £50 together to give $150; you first have to multiply each amount by a conversion factor ($1.15 per € and $1.31 per £) to get $115 + $65.50 = $180.50.

These weighted contributions are then added together. If this total is bigger than a predefined threshold, then the neuron sends out a predetermined output.

This is like the ‘binary bonus’ Clifford Chance offers to its newly qualified (NQ) associates. You get the same bonus as long as you meet the threshold. Whether you just meet the threshold or exceed it by 50%, the amount paid out (output) is always fixed.
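The count-and-check behaviour described above can be sketched in a few lines of Python. This is an illustrative sketch, not a definitive implementation: the function name `neuron` and the threshold values are assumptions, while the amounts and conversion rates are the ones from the currency example.

```python
def neuron(inputs, weights, threshold, output=1):
    """Weight each input, add the results, and fire only if the total exceeds the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    # The output is fixed: it does not grow with how far the total exceeds the threshold.
    return output if total > threshold else 0

# Currency example from the text: 100 euros and 50 pounds, weighted by dollar conversion rates.
amounts = [100, 50]
rates = [1.15, 1.31]
total_dollars = sum(a * r for a, r in zip(amounts, rates))  # about 180.5

fires = neuron(amounts, rates, threshold=150)      # total is above 150, so the neuron fires
stays_quiet = neuron(amounts, rates, threshold=200)  # total is below 200, so it does not
```

Raising the weighted total further above the threshold would not change the output, which mirrors the fixed ‘binary bonus’.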

Linking a set of neurons together in a network helps these simple objects solve complex problems.

Neural networks

We turn back again to the example neural network that Midori displayed on screen.

This specific diagram is a visual representation of a neural network that attempts to identify and characterise the number in an image. We can break down this image into the following parts:

1. On the left-hand side, there is an input layer of 784 neurons. This layer is the equivalent of breaking down an image into a 28 by 28 grid of squares, with one neuron per square, since 28 squared = 784.

2. On the right-hand side, there are 10 neurons in the output layer which correspond to the digits 0 through to 9.

3. The middle layer is where the exciting stuff happens. These are the ‘hidden’ layers in an artificial neural network.
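The three parts listed above can be sketched as a small Python program. Only the 784 inputs and 10 outputs come from the diagram; the single hidden layer of 16 neurons, the random weights and thresholds, and the helper names `layer` and `random_layer` are assumptions made for illustration. The network here is untrained, so its answer is meaningless; the sketch only shows how values flow from input to output.

```python
import random

def layer(inputs, weights, thresholds):
    """Each neuron outputs 1 if its weighted sum of inputs exceeds its threshold, else 0."""
    outputs = []
    for neuron_weights, threshold in zip(weights, thresholds):
        total = sum(x * w for x, w in zip(inputs, neuron_weights))
        outputs.append(1 if total > threshold else 0)
    return outputs

def random_layer(n_inputs, n_neurons, rng):
    """Untrained placeholder weights and thresholds; a real network learns these."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_inputs)] for _ in range(n_neurons)]
    thresholds = [rng.uniform(-1, 1) for _ in range(n_neurons)]
    return weights, thresholds

rng = random.Random(0)
N_INPUT, N_HIDDEN, N_OUTPUT = 28 * 28, 16, 10  # 16 is an assumed hidden-layer size

hidden_w, hidden_t = random_layer(N_INPUT, N_HIDDEN, rng)
output_w, output_t = random_layer(N_HIDDEN, N_OUTPUT, rng)

image = [rng.random() for _ in range(N_INPUT)]  # stand-in for 784 pixel brightness values
hidden = layer(image, hidden_w, hidden_t)       # hidden layer reacts to the pixels
scores = layer(hidden, output_w, output_t)      # one value per digit, 0 through 9
```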

Hidden layer design

We will only be scratching the surface of these layers but will cover some of their key features. In reality, a neural network would have multiple hidden layers between the input and the output. A network for identifying a single digit would have around 30 layers. This use of multiple hidden layers is partly where the ‘deep’ in deep learning comes from.

In these hidden layers, the precise number of neurons and the mathematical equation for how they fire aren’t necessary knowledge at this point. What is important is that these neurons all have different sets of input connections and all have a range of thresholds.

This architecture ensures that as the inputs from the left-hand side change, dramatic changes occur in how subsequent neurons in the hidden layers react. These changes then propagate forward through the network towards the outputs, and this process helps facilitate pattern recognition.

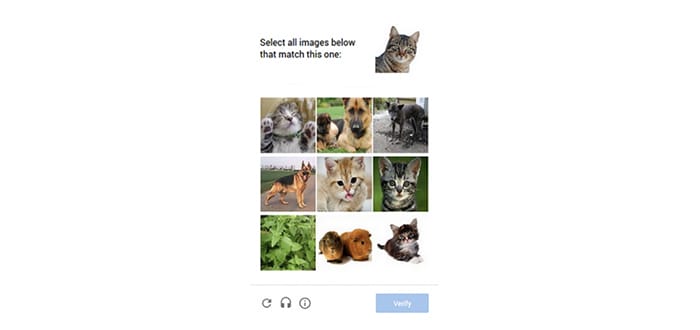

By passing prelabelled inputs through the network, these networks can be ‘trained’ to recognise patterns in a process called ‘supervised learning’. Every time you complete a ‘reCAPTCHA’, such as the one shown below, you are helping to train a neural network.

In the above case, we would be labelling an inputted image as either ‘cat’ or ‘not cat’, and helping a neural network do the same.
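To give a feel for what ‘training’ on prelabelled inputs means, here is a minimal Python sketch using the classic perceptron learning rule on a single neuron. The article does not say which training method real networks use, so this rule is a stand-in chosen for simplicity, and the two features (`has_whiskers`, `has_wheels`) and the four labelled examples are invented for illustration.

```python
def predict(features, weights, threshold):
    """A single count-and-check neuron: 1 means 'cat', 0 means 'not cat'."""
    total = sum(x * w for x, w in zip(features, weights))
    return 1 if total > threshold else 0

def train(examples, n_features, epochs=20, step=1.0):
    """Perceptron rule: after each wrong answer, nudge weights and threshold toward the label."""
    weights = [0.0] * n_features
    threshold = 0.0
    for _ in range(epochs):
        for features, label in examples:
            error = label - predict(features, weights, threshold)  # -1, 0 or +1
            weights = [w + step * error * x for w, x in zip(weights, features)]
            threshold -= step * error
    return weights, threshold

# Made-up features (has_whiskers, has_wheels); label 1 = 'cat', 0 = 'not cat'.
examples = [
    ((1, 0), 1),
    ((1, 1), 0),
    ((0, 1), 0),
    ((0, 0), 0),
]
weights, threshold = train(examples, n_features=2)
predictions = [predict(features, weights, threshold) for features, _ in examples]
```

After training, the neuron’s predictions match the labels it was shown. The labels do the teaching: nobody writes a rule for what a cat looks like.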

Another significant idea is the use of multiple neurons in each layer. As we outlined earlier, it’s not easily possible to design a simple algorithm to recognise shapes and characterise them as numbers. Instead, using multiple neurons in each layer allows different parts of the image to be analysed in parallel. This approach helps dramatically speed up the process.

Clifford Chance associate Midori Takenaka on the ethical debate surrounding the use of AI

Nishant Prasad is a former physicist at Imperial College London and is currently studying the Graduate Diploma in Law at BPP University Law School.
