Future magic circle trainee William Holmes considers whether ‘mutant algorithms’ should have their day in court, following this summer’s A-Level exam results fiasco

Can a statue be responsible for murder? Ancient Greek lawyers successfully persuaded jurors that it could be.

In the 5th century BC, a bronze statue of the star heavyweight boxer Theagenes of Thasos (known as the “son of Hercules” by his fans) was torn down by some of his rivals. As the statue fell, it killed one of the vandals. The victim’s sons took the case to court in pursuit of justice against the murderous statue. Under Draco’s code, a set of ancient Greek laws famed for their severity, even inanimate objects could feel the sharp edge of the law. As a result, Theagenes’s statue was found guilty and thrown into the sea.

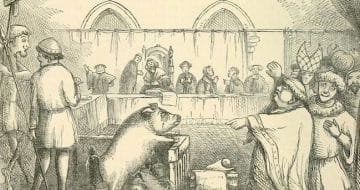

The practice of allocating legal responsibility to objects did not end with the ancient Greeks, but continued in the 11th century in the form of deodands. Deodands are items of property deemed to have committed a crime. As punishment, the offending property would be handed over to the relevant governing body so that it could be repurposed for a pious cause. Normally, criminal objects were sold and the money was used for some communal good (in theory at least). Deodand status remained until 1846 in the UK and still exists in the US today, last being referenced by the US Supreme Court in 1974.

The rationale behind such seemingly nonsensical allocations of responsibility is the aim of providing a sense of justice in society: nothing can escape the grasp of law and justice. Today, there are new objects that are a source of public outrage: computers. The algorithms inside computers have been found to create certain racial, gender and classist biases. But, no “mutant algorithm” has yet received its just deserts in the courtroom.

So, how should we respond to algorithmic injustices? Should we grant algorithms deodand status and cross examine computers in court?

‘Computer says no’

Algorithms are simply a logical “set of rules to be followed in problem-solving”. Today, they are used in technology for a variety of purposes. In the US, for example, algorithmic decision making (ADM) is used by certain banks, human resources teams, university admissions, health services and the criminal justice system. This means that a citizen’s ability to get a loan or a mortgage, get a job, get into university, receive medical care and be treated fairly by the criminal justice system is at the discretion of a computer.

There is a reason why they are this widely used: algorithms can contribute a great deal to making our lives more efficient and fairer. Humans suffer from flaws such as biases, making mistakes or being inconsistent. An algorithm does not suffer from these flaws and therefore has the potential to make a positive impact on society.

But this does not mean that algorithms are perfect, especially when making decisions on nuanced human issues. From a computer science perspective, using algorithms to achieve policy goals is complicated and unpredictable. The use of big data sets and machine learning (where a computer independently interprets patterns that inform its decisions) gives rise to instances where “computer says no” with unintended consequences that can breach the law or offend society.

And when something goes wrong there is a great deal of outrage. Recently, A-Level students angrily protested against the UK’s exam regulator, Ofqual, which used what Prime Minister Boris Johnson later described as a “mutant algorithm” that downgraded almost 40% of students’ grades, with a disproportionate effect on those from underprivileged backgrounds. Similarly, there have been troubling results from algorithms used in the justice system, whilst Facebook’s ad targeting algorithms have breached laws on discrimination as well as offended public sentiment about inequality.

As recent surveys have revealed, scandals like these only mean that people increasingly distrust algorithms that, despite their imperfections, offer important improvements to society. A common response to these issues is calls for increased transparency and accountability. But due to its technical nature, algorithmic accountability can be complicated to determine and difficult to communicate to those who do not have expert knowledge of computer science. These limitations on transparency have fuelled public frustration and distrust of algorithms.

So, is there a way to get justice (or at least a sense of justice) from any future mutant algorithms?

Dealing with unaccountable crimes

The possibility of bringing back deodands for algorithms offers an interesting solution. The justice system’s long history with inanimate objects reveals a great deal about the social function of trials.

From a technical perspective, putting inanimate objects on trial is madness. Yet, ancient Athens developed a special court called the Prytaneion that was dedicated to hearing trials of murderous inanimate objects. Policing and applying law to these objects was also of great importance. In Britain, from 1194 until the 18th century, it was the duty of a coroner to search for suspicious inanimate objects that could have played a role in a sudden or unexpected death, whilst determining the fate of criminal objects was frequently a topic of heated debate for senior statesmen in ancient Greece. Why?

Both in ancient Greece and Britain, these trials played an important role in responding to unaccountable (due to the absence of a guilty human), yet traumatic crimes. For the ancient Greeks, murder was especially traumatic because they felt a shared responsibility for the crime. This meant that they spent a great deal of time and money symbolically providing a narrative for the rare occasions when a murder was unaccountable (as happened with the statue of Theagenes of Thasos).

Moreover, the variation in juries’ conclusions reflects how these trials were primarily concerned with social justice within specific communities rather than broad legal rules. This can clearly be seen from the regional differences in medieval British deodands in horse and cart accidents. In one case in Oxfordshire, jurors felt that a cart and its horses were responsible for the death of a woman called Joan. In another case, only a specific part of a cart was deemed guilty of murdering a Yorkshireman, whilst its fellow parts were spared. But in Bedfordshire, the blame for the death of a man named Henry was pinned upon a variety of culprits: the horses, the cart, the harnesses and the wheat that was in the cart at the time of the incident.

From the Prytaneion to Parliament Square

Deodands not only provided a mechanism for developing a communal narrative to crimes where justice is hard to find, but have also replicated compensatory justice. Fast forward to the industrial revolution in 19th century Britain and communities were faced with injustices created by new technology.

Like algorithms, trains and railways incited outrage and fear in the 1830s and 1840s. In 1830, William Huskisson, the then MP for Liverpool, was so terrified when he got off a train on a new passenger line that he ran off the platform and onto the rails. His death was a landmark railway passenger casualty that overshadowed calls from engineers, architects and enthusiasts to embrace the wonders of the “iron road” in the minds of the public.

This led to a strong revival in deodand cases. Trains were being put on trial and train companies had to pay whatever the jury felt was fair. In the absence of widespread life insurance, deodands for the murderous trains were set at very high prices in order to compensate victims. Ultimately, the sympathy of jurors led to excessively costly rulings and provoked parliament to abolish deodands in the UK. Nevertheless, the 15 years in which they were effective allowed for a form of compensatory justice to develop in reaction to new and traumatic technology, which helped to boost public confidence in trains.

In the same way, algorithms have great potential to help create better solutions and a more equal society. The problem is that the public does not trust them. Just as the “iron roads” were feared in the 1830s, today the menace of an all-powerful black box algorithm dominates public perception of them. The potential effect of granting algorithms deodand status is twofold. First, putting computers on trial publicly provides a performative and ceremonial sense of justice, similar to the ancient Greek trials in the Prytaneion. This ceremonial justice has already been highlighted by artists like Helen Knowles. Second, providing compensation that is legally designated to a guilty computer communicates a direct and powerful sense of legal accountability to the public.

Although I doubt taking algorithms to the Supreme Court in Parliament Square is high on the UK Digital Taskforce’s agenda, when considering policy for algorithms, it might be worth investigating the age-old legal and communications trick of the deodand.

William Holmes is a penultimate year student at the University of Bristol studying French, Spanish and Italian. He has a training contract offer with a magic circle law firm.
